Unit testing is essential for building trustworthy AI agents that perform well across a variety of use cases. Langfuse offers tools that streamline this process. This tutorial walks through best practices, tools, and techniques for unit testing AI agents, with the goal of improving both development productivity and system reliability.

Unit testing is a core software development practice that verifies that each part of a system behaves as expected. For AI agents it is more complex: tests must cover not only code correctness but also model behavior, decision-making, and response consistency.
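As a minimal sketch of what such tests can look like, the example below checks a toy agent's decision-making (which tool it picks) and its response consistency (same input, same output). Everything here is hypothetical and for illustration only: `AgentReply`, `stub_model`, and `run_agent` are invented names, and the model call is replaced by a deterministic stub so the tests need no network access.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentReply:
    tool: str  # which tool the agent decided to use
    text: str  # the final answer returned to the user


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call; deterministic on purpose."""
    return f"Echo: {prompt.strip().lower()}"


def run_agent(question: str, model: Callable[[str], str] = stub_model) -> AgentReply:
    """Toy agent: questions containing digits go to a calculator, the rest to search."""
    tool = "calculator" if any(ch.isdigit() for ch in question) else "search"
    return AgentReply(tool=tool, text=model(question))


def test_decision_making() -> None:
    # The routing decision is plain code, so it can be asserted exactly.
    assert run_agent("What is 2 + 2?").tool == "calculator"
    assert run_agent("Who wrote Hamlet?").tool == "search"


def test_response_consistency() -> None:
    # With a deterministic model, repeated calls must yield one distinct reply.
    replies = {run_agent("Who wrote Hamlet?").text for _ in range(5)}
    assert len(replies) == 1
```

The functions follow the `test_` naming convention, so a runner such as pytest can collect them. Injecting the model as a parameter is the key design choice: it lets the agent's own logic be tested separately from the model behind it.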

Unit testing is essential for AI agents for several reasons:

Without systematic unit testing, AI agents can behave erratically, undermining user experience, system reliability, and business performance.

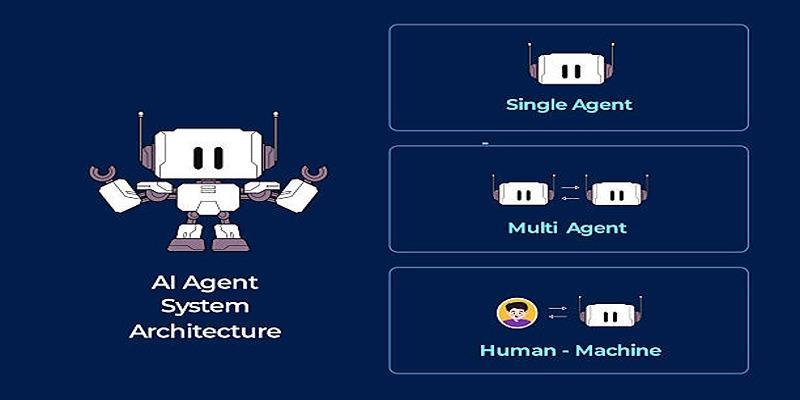

Developers need to pay attention to the following components to test AI agents effectively:

Focusing on these elements helps developers systematically evaluate agent behavior, identify weaknesses, and take corrective action before deployment.

Langfuse supports unit testing of AI agents with integration, monitoring, and evaluation tools. Setting up tests involves several essential steps:

This systematic process keeps testing standardized, reproducible, and practical to implement, enabling teams to develop high-quality AI agents.
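One way such a process might be structured is sketched below: define test cases, run the agent on each, score the output, and record the result. This is an assumption about the general shape of the workflow, not Langfuse's API. `AgentCase`, `RunRecorder`, `toy_agent`, and `run_suite` are hypothetical names, and `RunRecorder` is an in-memory stand-in for wherever a tracing or evaluation backend would be called.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AgentCase:
    name: str
    input: str
    expected_substring: str  # simple check: output must contain this text


@dataclass
class RunRecorder:
    """Hypothetical in-memory stand-in for a tracing/evaluation backend."""
    records: List[Dict] = field(default_factory=list)

    def log(self, case: str, output: str, score: float) -> None:
        self.records.append({"case": case, "output": output, "score": score})

    def pass_rate(self) -> float:
        if not self.records:
            return 0.0
        return sum(r["score"] for r in self.records) / len(self.records)


def toy_agent(question: str) -> str:
    """Hypothetical agent with a tiny lookup table instead of a model."""
    answers = {"capital of france": "Paris is the capital of France."}
    return answers.get(question.lower().rstrip("?"), "I don't know.")


def run_suite(agent: Callable[[str], str], cases: List[AgentCase],
              recorder: RunRecorder) -> float:
    """Run every case, score it 1.0 or 0.0, record it, and return the pass rate."""
    for case in cases:
        output = agent(case.input)
        score = 1.0 if case.expected_substring in output else 0.0
        recorder.log(case.name, output, score)
    return recorder.pass_rate()


CASES = [
    AgentCase("known_fact", "Capital of France?", "Paris"),
    AgentCase("unknown_fact", "Capital of Atlantis?", "don't know"),
]
```

Calling `run_suite(toy_agent, CASES, RunRecorder())` returns the fraction of cases that passed. Keeping the cases as data and the recorder as a separate object means the same suite can be rerun after every prompt or model change and the results compared.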

To get the most out of unit testing, follow these best practices:

These practices minimize mistakes, enhance reliability, and simplify the creation of AI agents.

Langfuse offers the following features that ease and improve unit testing:

Together, these features make Langfuse a comprehensive platform for validating AI agents and continuously improving their performance.

Unit testing an AI agent differs from classical software testing in several ways:

Langfuse's tools for logging, metrics tracking, and environment management reduce these challenges and help testing succeed.
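One commonly cited difference, assumed here for illustration, is that a sampled model can phrase its answer differently on every run, so an exact-match assertion is too brittle. The sketch below tracks a pass rate over repeated runs and checks a property of the output instead. `flaky_agent`, `contains_four`, and `pass_rate` are hypothetical names, and a seeded `random.Random` simulates the variation.

```python
import random
from typing import Callable


def flaky_agent(question: str, rng: random.Random) -> str:
    """Hypothetical agent whose wording varies between runs, like a sampled model."""
    templates = [
        "The answer is 4.",
        "2 + 2 equals 4.",
        "It is 4",
        "I'm not sure.",  # occasional failure mode
    ]
    return rng.choice(templates)


def contains_four(output: str) -> bool:
    """Property check: accept any phrasing that includes the right number."""
    return "4" in output


def pass_rate(agent: Callable[[str, random.Random], str], question: str,
              check: Callable[[str], bool], runs: int, seed: int) -> float:
    """Run the agent `runs` times with a seeded RNG and return the fraction passing."""
    rng = random.Random(seed)
    passed = sum(check(agent(question, rng)) for _ in range(runs))
    return passed / runs
```

A test can then assert a threshold, for example `pass_rate(flaky_agent, "What is 2 + 2?", contains_four, runs=200, seed=0) >= 0.5`, rather than a single exact string. Fixing the seed keeps the simulated run reproducible. With a real model the rate will fluctuate, so the threshold needs some margin.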

Mastering AI agent unit testing in Langfuse is essential for developing intelligent, reliable, and high-performing AI systems. By understanding key testing components, leveraging Langfuse features, implementing best practices, and addressing unique AI challenges, developers can ensure their agents deliver consistent and trustworthy results. Langfuse not only simplifies the technical aspects of unit testing but also provides comprehensive tools for tracking, analyzing, and refining agent behavior over time.
